
Lane Detection and Quality Assessment


We detect the immediate left and right lane boundaries relative to the vehicle and quantify their quality, because these boundaries are the primary cues for guiding the vehicle. We assume that the vehicle is equipped with one front-view camera, one LIDAR system, and GPS/IMU units, which is a common sensor configuration for autonomous vehicles.

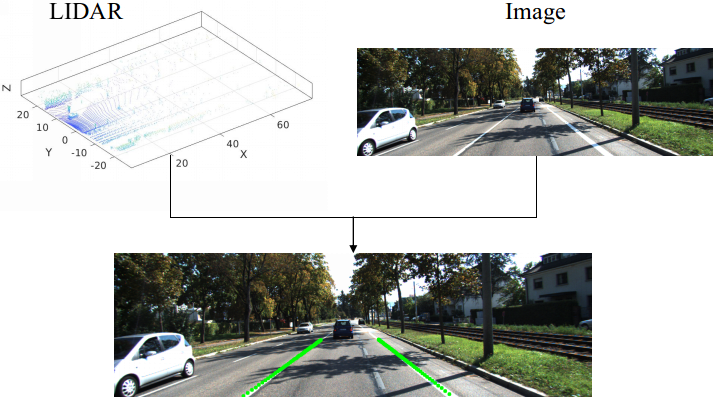

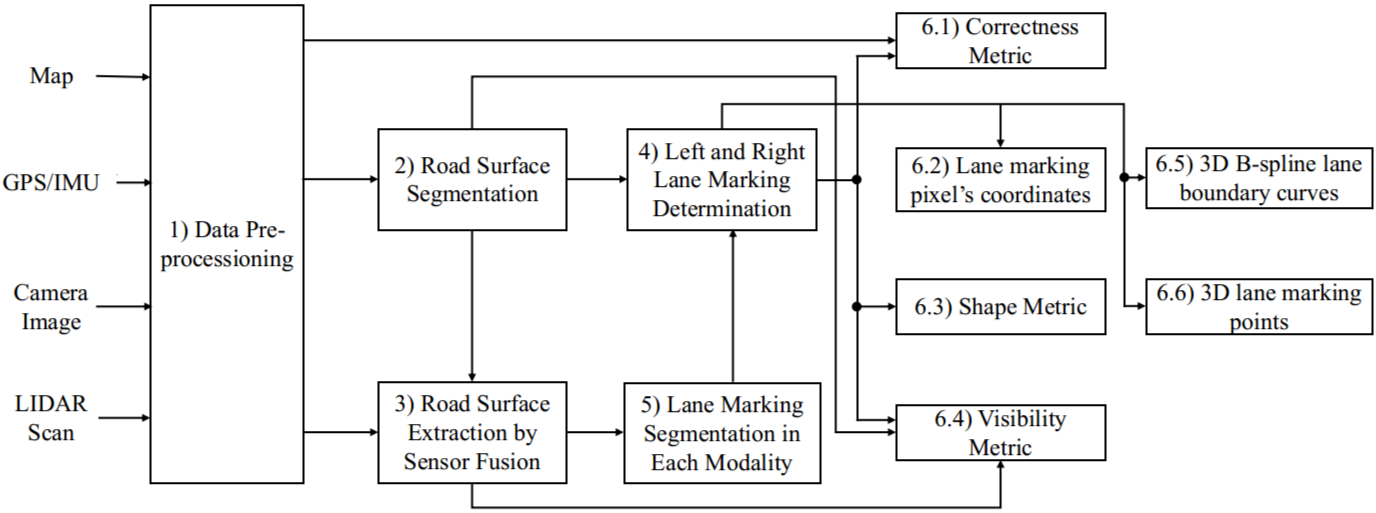

Fig.1. Sample sensor input and output.

Fig.2. System diagram.

The software requires the sensory data to satisfy the following conditions:


GitHub: [internal] [external]

Please click external if you are not on the tamu.edu domain.

Binary: [Demo] (Ubuntu 16.04)

Data: [KITTI synced + rectified data]


As more and more companies are developing autonomous vehicles (AVs), it is important to ensure that the driving behavior of AVs is human-compatible because AVs will have to share roads with human drivers in the years to come.

We propose a new tightly-coupled perception-planning framework to improve human-compatibility.

Using GPS-camera-LIDAR multi-modal sensor fusion, we detect actual lane boundaries (ALBs) and propose availability-reasonability-feasibility tests to determine whether we should generate virtual lane boundaries (VLBs) or follow the ALBs.
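This page does not say how the three tests are computed. As a minimal sketch, assuming each test reduces to a threshold check on a hypothetical per-boundary summary (`LaneObservation`, whose fields and thresholds are made up for illustration), the choice between following ALBs and generating VLBs could be gated like this:

```python
from dataclasses import dataclass


@dataclass
class LaneObservation:
    """Hypothetical per-boundary summary; all fields are illustrative assumptions."""
    detected: bool             # was an ALB found at all?
    quality: float             # assumed detection-quality score in [0, 1]
    heading_error_deg: float   # angle between the ALB and the GPS route direction
    clearance_m: float         # free space between the ALB-defined lane and the nearest obstacle


def choose_boundary_source(obs: LaneObservation,
                           min_quality: float = 0.5,
                           max_heading_error_deg: float = 30.0,
                           min_clearance_m: float = 1.0) -> str:
    """Return "ALB" to follow actual lane boundaries, or "VLB" to generate virtual ones.

    Thresholds are hypothetical placeholders, not values from the original work.
    """
    # Availability: a boundary exists and its quality is high enough to trust.
    available = obs.detected and obs.quality >= min_quality
    # Reasonability: the boundary roughly agrees with the direction of the global plan.
    reasonable = obs.heading_error_deg <= max_heading_error_deg
    # Feasibility: the vehicle can drive within the lane without hitting an obstacle.
    feasible = obs.clearance_m >= min_clearance_m
    return "ALB" if (available and reasonable and feasible) else "VLB"


print(choose_boundary_source(LaneObservation(True, 0.9, 5.0, 2.0)))  # clear, well-aligned lane
print(choose_boundary_source(LaneObservation(True, 0.9, 5.0, 0.3)))  # lane blocked by an obstacle
```

The first call prints `ALB` and the second prints `VLB`, since only the blocked lane fails the (assumed) feasibility check. A real system would likely derive these quantities from the fused camera, LIDAR, and GPS data rather than receive them precomputed.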

When needed, VLBs are generated using a dynamically adjustable multi-objective optimization framework that considers obstacle avoidance, trajectory smoothness (to satisfy vehicle kinodynamic constraints), trajectory continuity (to avoid sudden movements), GPS following quality (to execute the global plan), and lane following or partial direction following (to meet human expectations).
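To illustrate what a dynamically adjustable multi-objective formulation can look like, the sketch below scores candidate VLBs, each represented as lateral offsets sampled at evenly spaced stations along the road, with a weighted sum of one penalty per objective listed above, and picks the cheapest candidate. The path representation, the quadratic penalty forms, `safe_dist`, and every function name are assumptions for illustration, not the authors' formulation; "dynamically adjustable" is modeled here simply as changing the weight dictionary at run time.

```python
from typing import Dict, List, Sequence, Tuple

Path = List[float]  # hypothetical: lateral offsets (m) at evenly spaced stations


def squared_deviation(path: Sequence[float], ref: Sequence[float]) -> float:
    """Sum of squared differences between a path and a reference path."""
    return sum((p - r) ** 2 for p, r in zip(path, ref))


def smoothness_cost(path: Sequence[float]) -> float:
    """Sum of squared second differences, a discrete proxy for curvature."""
    return sum((path[i - 1] - 2 * path[i] + path[i + 1]) ** 2
               for i in range(1, len(path) - 1))


def obstacle_cost(path: Sequence[float],
                  obstacles: Sequence[Tuple[int, float]],
                  safe_dist: float) -> float:
    """Quadratic penalty when the path passes closer than safe_dist to an obstacle.

    Each obstacle is a (station index, lateral offset in m) pair.
    """
    cost = 0.0
    for idx, lateral in obstacles:
        gap = abs(path[idx] - lateral)
        if gap < safe_dist:
            cost += (safe_dist - gap) ** 2
    return cost


def vlb_cost(path: Path, weights: Dict[str, float], prev_path: Path,
             gps_ref: Path, lane_ref: Path,
             obstacles: Sequence[Tuple[int, float]], safe_dist: float = 1.5) -> float:
    """Weighted sum of the per-objective penalties for one candidate VLB."""
    terms = {
        "obstacle": obstacle_cost(path, obstacles, safe_dist),
        "smoothness": smoothness_cost(path),
        "continuity": squared_deviation(path, prev_path),  # stay close to the last VLB
        "gps": squared_deviation(path, gps_ref),           # follow the global plan
        "lane": squared_deviation(path, lane_ref),         # lane/direction following
    }
    return sum(weights[name] * value for name, value in terms.items())


def best_vlb(candidates: Sequence[Path], weights: Dict[str, float], **context) -> Path:
    """Pick the candidate with the lowest weighted cost."""
    return min(candidates, key=lambda path: vlb_cost(path, weights, **context))


# Usage: an obstacle sits on the reference path at station 2.
straight = [0.0] * 5
context = dict(prev_path=straight, gps_ref=straight, lane_ref=straight,
               obstacles=[(2, 0.0)], safe_dist=1.5)
candidates = [straight, [0.0, 1.0, 2.0, 1.0, 0.0], [2.0] * 5]
balanced = {"obstacle": 10.0, "smoothness": 1.0, "continuity": 0.1, "gps": 0.1, "lane": 0.1}
smooth_first = dict(balanced, smoothness=10.0)  # same objectives, re-weighted at run time
print(best_vlb(candidates, balanced, **context))
print(best_vlb(candidates, smooth_first, **context))
```

With the `balanced` weights the swerving candidate wins (cost 5.8 versus 6.0 for the constant shift and 22.5 for driving straight into the obstacle); raising only the smoothness weight flips the choice to the constant 2 m shift, which is the kind of behavior change that adjustable weights allow.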

Fig.1. Sample output. Green curves are the VLBs generated by our algorithm.